Are you already using Matomo and want to expand your web analytics in a data-driven way? Then you've come to the right place. Here you'll learn how to close the gaps in Matomo's reporting using Google Looker Studio and the Matomo API, taking individual web analyses to a new level.

Why Matomo?

Matomo has become widely used as an open-source web analytics solution, especially since the GDPR, which entered into force in 2016 and has applied since May 2018. With a market share of 6% in Germany, Matomo ranks second, just behind Google Analytics.

As a website operator, you enjoy full control over your data because you host Matomo independently on your own infrastructure. This minimizes the risk of sharing data with third parties, which is a concern with Google Analytics, and makes it easier to meet data protection requirements. However, Matomo does have limitations in certain areas: the user interface looks outdated, permission management is limited, and extensions such as conversion exports often incur additional fees.

Looker Studio in combination with Matomo (API)

The biggest pain point with Matomo is often its limited reporting. This is where Looker Studio comes in: via the Matomo API, you can retrieve visit data and store it in BigQuery. There, it can be transformed as needed and finally processed flexibly in Looker Studio.

Technical Process

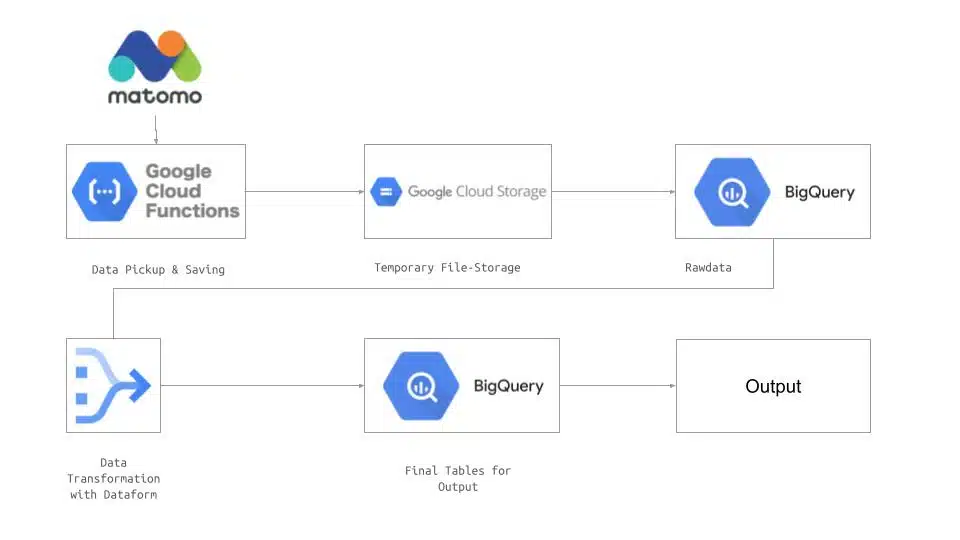

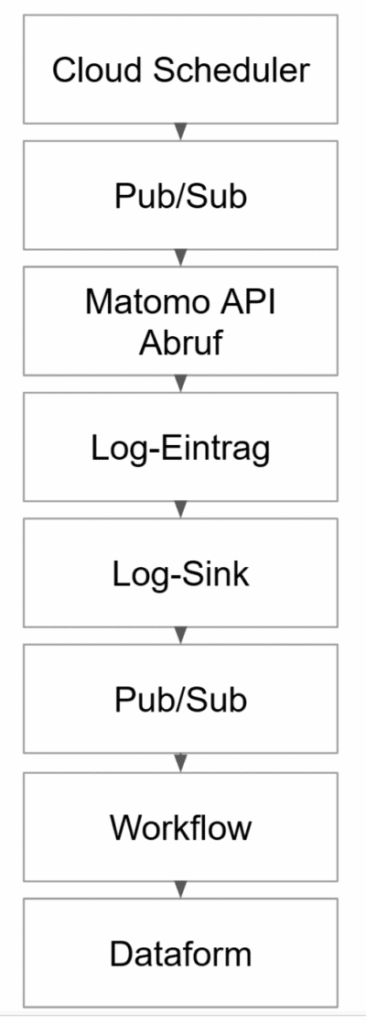

The data flow from Matomo to visualization in Looker Studio can be summarized in four steps:

- Querying the Matomo API: A Google Cloud Function regularly retrieves the data via the Live.getLastVisitsDetails endpoint.

- Storage in BigQuery: The data is cached in Google Cloud Storage and then loaded into a BigQuery table.

- Further processing via Dataform: A Dataform package transforms your raw data and makes it available for various applications.

- Output in Looker Studio: You can integrate the prepared data into Looker Studio either via a native BigQuery connection or via another Cloud Function.

This way, you bypass the limitations of the Matomo interface and gain more flexibility in your web analyses.
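The storage step in the list above hinges on a file format that BigQuery can load directly. As a minimal sketch, assuming the visits have already been decoded from the API response into a list of Python dicts, the function below serializes them as newline-delimited JSON (one visit per line), the JSON layout BigQuery load jobs accept. The function name visits_to_ndjson and the sample values are illustrative and not part of the original solution.

```python
import json
from typing import Iterable


def visits_to_ndjson(visits: Iterable[dict]) -> str:
    """Serialize visits as newline-delimited JSON: one compact JSON object per line."""
    # ensure_ascii=False keeps non-ASCII characters (e.g. city names) readable
    return "\n".join(
        json.dumps(visit, ensure_ascii=False, separators=(",", ":")) for visit in visits
    )


if __name__ == "__main__":
    # Hypothetical sample visits, reduced to a few fields
    sample = [
        {"idVisit": 1, "city": "München", "actionDetails": [{"type": "action"}]},
        {"idVisit": 2, "city": "Berlin", "actionDetails": []},
    ]
    print(visits_to_ndjson(sample))
```

In a Cloud Function, the resulting string could then be uploaded with the google-cloud-storage client (Blob.upload_from_string) and loaded from the bucket with a BigQuery load job configured for SourceFormat.NEWLINE_DELIMITED_JSON.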


Data flow within the solution: A Google Cloud Function retrieves data from Matomo and stores it in BigQuery.

First Success: Accessing the Matomo API

To access the Matomo API, you need an API token. You create this token in your Matomo user account. The token is sent as the token_auth parameter with every request so that only authorized users have access.

Since Matomo version 5, API retrieval is only possible via POST request (in version 4, it was even easier via a simple browser call). With the filter_limit and filter_offset parameters, you can also control pagination to load even large amounts of data from Matomo in smaller chunks.
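Pagination with filter_limit and filter_offset boils down to a simple loop: request a page, advance the offset by the page size, and stop once Matomo returns fewer visits than requested. The sketch below keeps the HTTP call out of the loop. fetch_page is a hypothetical callable that you would implement with a POST request like the one in the Cloud Function example, which lets the loop be tested without a Matomo instance.

```python
from typing import Callable, Iterator, List

# Hypothetical signature: fetch_page(offset, limit) sends one Live.getLastVisitsDetails
# request with filter_offset=offset and filter_limit=limit and returns the decoded list.
FetchPage = Callable[[int, int], List[dict]]


def iter_visit_pages(fetch_page: FetchPage, filter_limit: int = 250) -> Iterator[List[dict]]:
    """Yield pages of visits until Matomo returns a short or empty page."""
    if filter_limit <= 0:
        raise ValueError("filter_limit must be positive")
    offset = 0
    while True:
        page = fetch_page(offset, filter_limit)
        if page:
            yield page
        # Fewer visits than requested means the last page has been reached
        if len(page) < filter_limit:
            return
        offset += filter_limit


if __name__ == "__main__":
    # Stand-in for the Matomo API: 600 fake visits served page by page
    fake_visits = [{"idVisit": i} for i in range(600)]

    def fake_fetch(offset: int, limit: int) -> List[dict]:
        return fake_visits[offset:offset + limit]

    for page in iter_visit_pages(fake_fetch, filter_limit=250):
        print(len(page))  # 250, then 250, then 100
```

Because the endpoint returns the most recent visits first, visits that arrive while you are paging through a date range that includes today can shift the offsets, so the same visit may show up twice. Deduplicating by idVisit further downstream covers that case.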

In practice, a Google Cloud Function usually handles the periodic querying of Matomo data. The Cloud Function temporarily stores the data and then imports it into BigQuery, which even allows for intraday analyses. How often you actually retrieve your data depends on factors such as costs and data volume; in our experience, querying every four hours has proven effective.

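```python
import datetime
import os

import requests


class Config:
    url = os.environ.get("URL")  # Matomo URL, e.g. https://matomo.example.com
    token = os.environ.get("TOKEN")  # Matomo API token
    idSite = os.environ.get("SITEID", 1)  # Matomo siteId to fetch
    backfill_days = int(os.environ.get("BACKFILL", 1))  # Days of backfill from the current day
    filter_limit = int(os.environ.get("FILTER_LIMIT", 250))  # Number of visits fetched per request
    period = os.environ.get("PERIOD", "range")  # Period string for the Matomo API
    location = os.environ.get("LOCATION", "europe-west3")  # BigQuery and Cloud Storage location
    project_id = os.environ.get("PROJECT_ID", "")  # GCP project ID
    dataset_name = os.environ.get("DATASET_NAME", "matomo")  # BigQuery dataset name
    table_name = os.environ.get("TABLE_NAME", "incoming_data")  # Temporary table during the storing process
    logger_name = os.environ.get("LOGGER_NAME", "matomo_api")  # Logger name for GCP logs
    # GCS bucket; the "" default above avoids a TypeError when PROJECT_ID is unset
    bucket_name = project_id + "_" + os.environ.get("BUCKET_NAME", "matomo_api_data")
    output_format = "json"  # Do not edit
    filter_offset = 0  # Do not edit


def daterange(date_from=None, date_to=None):
    # Assumed helper (not shown in the original): builds the "YYYY-MM-DD,YYYY-MM-DD"
    # string Matomo expects for period=range, defaulting to the backfill window.
    today = datetime.date.today()
    date_from = date_from or (today - datetime.timedelta(days=Config.backfill_days)).isoformat()
    date_to = date_to or today.isoformat()
    return f"{date_from},{date_to}"


# Hypothetical: in the Cloud Function, params would come from the trigger payload.
params = {}

headers = {}

req = {
    "token_auth": Config.token,  # sent in the POST body rather than in the URL
    "idsite": Config.idSite,
    "period": Config.period,
    "date": daterange(params.get("date_from"), params.get("date_to")),
    "format": Config.output_format,
    "filter_limit": str(Config.filter_limit),
    "filter_offset": str(Config.filter_offset),
}

response = requests.post(
    Config.url + "/index.php?module=API&method=Live.getLastVisitsDetails",
    headers=headers,
    data=req,
    timeout=120,
)
response.raise_for_status()
jdata = response.json()

# Matomo can report API errors as a JSON object such as {"result": "error", "message": "..."}
if isinstance(jdata, dict) and jdata.get("result") == "error":
    raise RuntimeError(jdata.get("message"))
```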

Example API call in a Cloud Function using the Live.getLastVisitsDetails endpoint.

Matomo getLastVisitsDetails ≠ Raw Tracking Data

The Live.getLastVisitsDetails endpoint does not provide pure raw data but information that has already been processed. For example, URLs have already been cleaned of parameters such as gclid, fbclid, or msclkid.

- Goals are included, while certain events (e.g., addEcommerceItem) only appear indirectly as an abandoned shopping cart (ecommerceAbandonedCart).

- When importing into BigQuery, you should omit irrelevant fields such as icon paths or various aggregations to keep your dataset lean.
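Trimming such fields can happen before the data reaches BigQuery. The sketch below recursively removes keys from a visit, including keys inside nested lists such as actionDetails. Which keys count as irrelevant is an assumption: the predicate drops keys ending in Icon, Icons or Flag and keys containing Pretty (the human-readable duplicates such as visitDurationPretty), so check it against the fields your Matomo version actually returns.

```python
from typing import Any, Callable


def is_presentation_field(key: str) -> bool:
    """Assumed naming convention for fields not needed in BigQuery.

    Drops icon/flag paths (e.g. browserIcon, countryFlag) and the
    human-readable duplicates (e.g. visitDurationPretty).
    """
    lowered = key.lower()
    return lowered.endswith(("icon", "icons", "flag")) or "pretty" in lowered


def prune(value: Any, drop: Callable[[str], bool] = is_presentation_field) -> Any:
    """Recursively remove dict keys for which drop(key) is True, descending into lists."""
    if isinstance(value, dict):
        return {k: prune(v, drop) for k, v in value.items() if not drop(k)}
    if isinstance(value, list):
        return [prune(item, drop) for item in value]
    return value


if __name__ == "__main__":
    visit = {
        "idVisit": 1,
        "visitDuration": 42,
        "visitDurationPretty": "42s",
        "browserIcon": "path/to/icon.png",
        "actionDetails": [{"url": "https://example.com/", "icon": "path/to/icon.png"}],
    }
    print(prune(visit))
    # {'idVisit': 1, 'visitDuration': 42, 'actionDetails': [{'url': 'https://example.com/'}]}
```

Passing a different drop predicate keeps the traversal reusable if you prefer an explicit allow-list or deny-list of field names.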

Typical information you receive via Live.getLastVisitsDetails includes:

- Visit Details: Session duration (in seconds and in human-readable form), number of pages viewed, etc.

- Referrer Information: Type of referrer (search engine, direct entry, social media), name, URL, keyword.

- Device Information: Device type (desktop, tablet, smartphone), operating system, browser, installed plugins.

- Location Data: City, region, country (with flag), continent, geo-coordinates, language settings.

- Page and Event Data: Visited URLs, titles, time spent, load times, event categories and actions.

- E-Commerce Data: Product information (name, SKU, price, category), abandoned carts, order ID, revenue, taxes, shipping, discounts, ordered items.

Here you will find the complete table with all fields of the Live.getLastVisitsDetails endpoint.

With this broad range of data, you can gain deep insights into your visitors’ behavior and thus significantly improve your web analytics in e-commerce and online marketing.

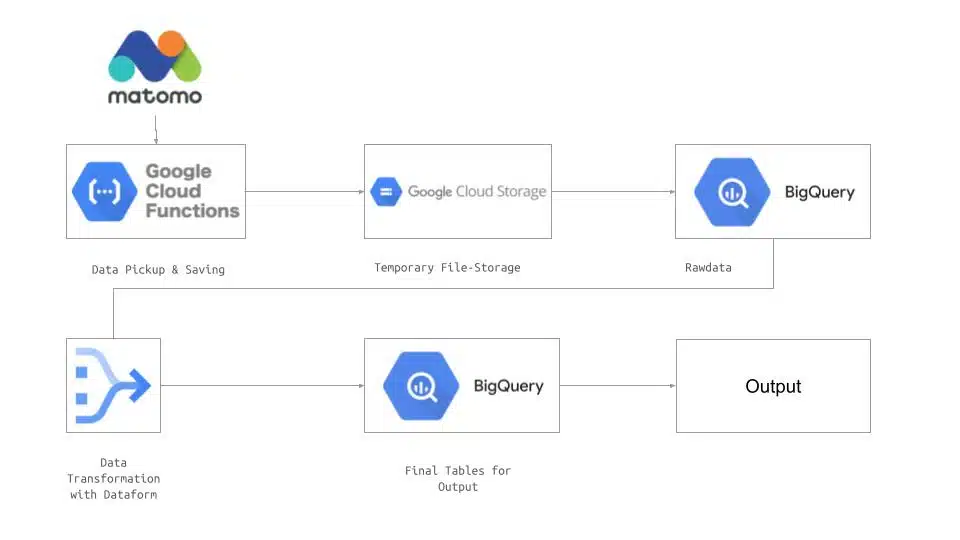

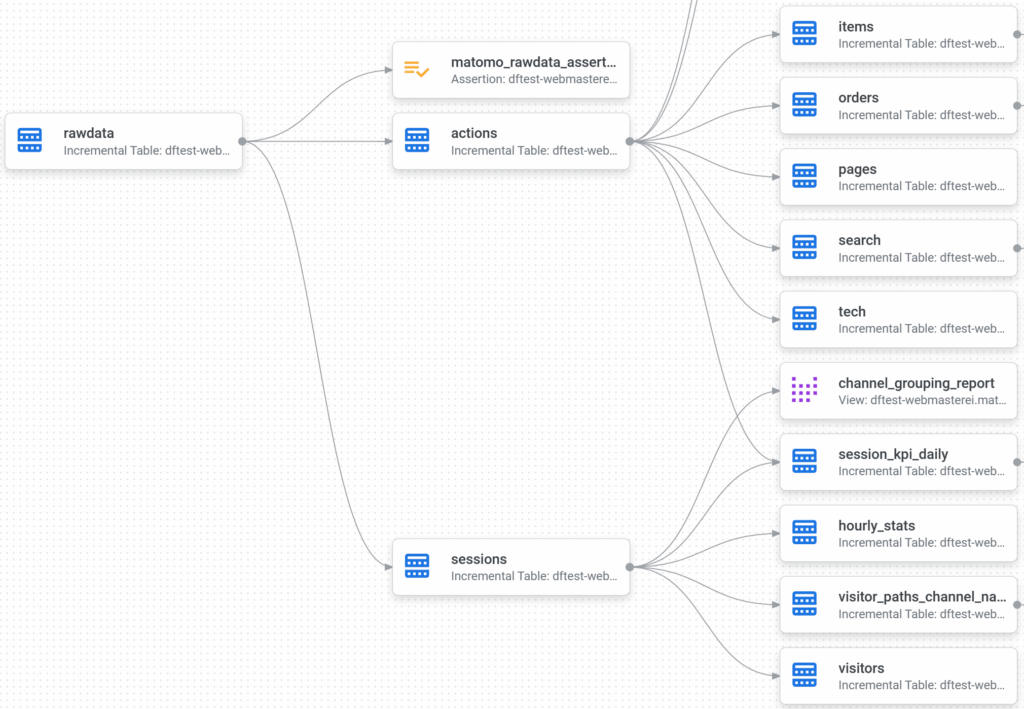

Data transformation with Dataform

To efficiently prepare your Matomo data, it's worth taking a look at Dataform. This tool is integrated into the Google Cloud Platform and supports you in managing, automating, and orchestrating data pipelines.

Advantages of Dataform:

- Seamless Git integration for version control.

- SQL workflows can be efficiently managed and automated.

- Incremental MERGE logic simplifies data updates.

- A compilation graph always gives you a visual overview of tables and dependencies.
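The MERGE logic behind incremental tables is an upsert: rows whose key already exists are updated, and rows with new keys are inserted. Dataform generates the actual SQL MERGE statement for incremental tables with a unique key; to illustrate only the semantics, here is the same behavior in a few lines of Python. Keying on idVisit is an assumption suited to a sessions table, and the function name merge_rows is hypothetical.

```python
from typing import Dict, Hashable, Iterable


def merge_rows(
    existing: Dict[Hashable, dict], incoming: Iterable[dict], key: str = "idVisit"
) -> Dict[Hashable, dict]:
    """Upsert incoming rows into a copy of existing, keyed by row[key].

    Mirrors MERGE ... WHEN MATCHED THEN UPDATE / WHEN NOT MATCHED THEN INSERT;
    if the same key appears twice in incoming, the later row wins.
    """
    merged = dict(existing)  # leave the caller's table untouched
    for row in incoming:
        merged[row[key]] = row
    return merged


if __name__ == "__main__":
    table = {101: {"idVisit": 101, "actions": 3}}
    batch = [{"idVisit": 101, "actions": 5}, {"idVisit": 102, "actions": 1}]
    print(merge_rows(table, batch))
    # {101: {'idVisit': 101, 'actions': 5}, 102: {'idVisit': 102, 'actions': 1}}
```

This is also why re-importing an overlapping time window is harmless: a visit fetched again simply replaces its earlier, possibly incomplete version.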

Typically, you create two base tables from the raw data using Dataform, e.g., actions and sessions, which you can then further split or aggregate. This way, you only load the data you really need into Looker Studio – saving time and keeping your reports clear.
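How the raw visits map onto these two base tables can be sketched in Python: each visit becomes one session row, and each entry of its actionDetails list becomes one action row that carries the idVisit for joining. In the actual setup this happens in SQL inside Dataform; the function split_visits and its position column are hypothetical and only illustrate the shape of the two tables.

```python
from typing import Dict, List, Tuple


def split_visits(visits: List[Dict]) -> Tuple[List[Dict], List[Dict]]:
    """Split raw visits into session rows and action rows (hypothetical column layout)."""
    sessions: List[Dict] = []
    actions: List[Dict] = []
    for visit in visits:
        # One session row per visit: all visit-level fields except the nested action list
        sessions.append({k: v for k, v in visit.items() if k != "actionDetails"})
        # One action row per entry in actionDetails, keyed back to its visit
        for position, action in enumerate(visit.get("actionDetails", []), start=1):
            actions.append({**action, "idVisit": visit["idVisit"], "position": position})
    return sessions, actions


if __name__ == "__main__":
    raw = [{"idVisit": 7, "visitDuration": 30, "actionDetails": [{"type": "action", "url": "https://example.com/"}]}]
    sessions, actions = split_visits(raw)
    print(sessions)  # [{'idVisit': 7, 'visitDuration': 30}]
    print(actions)   # [{'type': 'action', 'url': 'https://example.com/', 'idVisit': 7, 'position': 1}]
```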

Take the configuration of the actions table as an example:

- Incremental processing (type: "incremental" in the config { } block).

- Only a defined period is updated via partitioned tables.

- Assertions check that no duplicate entries are created.

- pre_operations delete certain sessions before each run so that visits that were still incomplete at the last import can be recaptured.

- JavaScript functions enable central CASE statements (e.g., for channel names).
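The idea behind central CASE statements is to define the channel rules once and render them wherever they are needed. In Dataform this would be a JavaScript function in the includes/ folder, referenced from SQLX via ${...}; to stay in one language, the sketch below shows the same pattern in Python. The rule list and channel names are hypothetical examples, and the referrerType values are assumed to match what your Matomo data contains.

```python
from typing import Sequence, Tuple

# Hypothetical channel rules: (SQL condition, channel name); the first match wins.
CHANNEL_RULES: Sequence[Tuple[str, str]] = (
    ("referrerType = 'search'", "Organic Search"),
    ("referrerType = 'social'", "Social"),
    ("referrerType = 'campaign'", "Campaign"),
    ("referrerType = 'website'", "Referral"),
    ("referrerType = 'direct'", "Direct"),
)


def sql_string(value: str) -> str:
    """Quote a value as a BigQuery string literal, escaping backslashes and quotes."""
    return "'" + value.replace("\\", "\\\\").replace("'", "\\'") + "'"


def channel_case(rules: Sequence[Tuple[str, str]] = CHANNEL_RULES, default: str = "Other") -> str:
    """Render the rules as a single CASE expression that every model can embed."""
    lines = ["CASE"]
    lines += [f"  WHEN {condition} THEN {sql_string(name)}" for condition, name in rules]
    lines += [f"  ELSE {sql_string(default)}", "END"]
    return "\n".join(lines)


if __name__ == "__main__":
    print(f"SELECT idVisit, {channel_case()} AS channel FROM sessions")
```

Changing a channel definition then means editing one rule list instead of every query that classifies traffic.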

Data flow in Dataform: Data is loaded from the rawdata table and written into actions and sessions.

Triggers, Timing, Messages

A reliable data pipeline requires well-thought-out orchestration. Instead of starting each step at a fixed time, it is usually better to chain the steps with triggers and Pub/Sub messages:

- Cloud Scheduler: Triggers a Pub/Sub message and starts the Cloud Function.

- Cloud Function: Loads the raw data from Matomo into BigQuery and writes a log entry upon success.

- Log sink: Detects the entry and triggers a workflow.

- Workflow: Performs an update of your Dataform package and starts Dataform.

- Looker Studio: Retrieves the updated data from BigQuery.

This ensures that no step starts before the previous one has been successfully completed.
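Two small pieces of this chain live in the Cloud Function itself: reading the parameters that Cloud Scheduler sends via Pub/Sub, and writing a log entry that the log sink can match. The sketch below assumes a Pub/Sub-triggered function whose CloudEvent data has the usual {"message": {"data": <base64>}} shape and a scheduler payload written as JSON; the log message matomo_import_succeeded and the extra log fields are hypothetical.

```python
import base64
import json
from typing import Any, Dict


def params_from_pubsub(event_data: Dict[str, Any]) -> Dict[str, Any]:
    """Decode the JSON payload Cloud Scheduler published to the Pub/Sub topic.

    event_data is assumed to be the CloudEvent data of a Pub/Sub-triggered
    function, i.e. {"message": {"data": "<base64>", ...}, "subscription": "..."}.
    A missing or empty payload returns {} so the function falls back to its defaults.
    """
    encoded = event_data.get("message", {}).get("data")
    if not encoded:
        return {}
    return json.loads(base64.b64decode(encoded).decode("utf-8"))


def success_log_line(rows_loaded: int, table: str) -> str:
    """Build a structured log line; printed to stdout, Cloud Logging parses it as JSON."""
    return json.dumps(
        {
            "severity": "INFO",
            "message": "matomo_import_succeeded",  # hypothetical marker the log sink filters on
            "rows_loaded": rows_loaded,
            "table": table,
        }
    )


if __name__ == "__main__":
    # Simulated Pub/Sub event carrying a hypothetical scheduler payload
    payload = json.dumps({"date_from": "2024-05-01", "date_to": "2024-05-02"})
    event = {"message": {"data": base64.b64encode(payload.encode("utf-8")).decode("ascii")}}
    print(params_from_pubsub(event))
    print(success_log_line(1200, "matomo.incoming_data"))
```

In Cloud Functions (2nd gen) and Cloud Run, printing such a JSON line to stdout is enough for Cloud Logging to store it as a structured entry, so a sink filter along the lines of jsonPayload.message="matomo_import_succeeded" could pick it up. The decoded dict can serve as the params used when building the date range for the API request.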

Sequence of triggers and messages: Ensuring a smooth process.

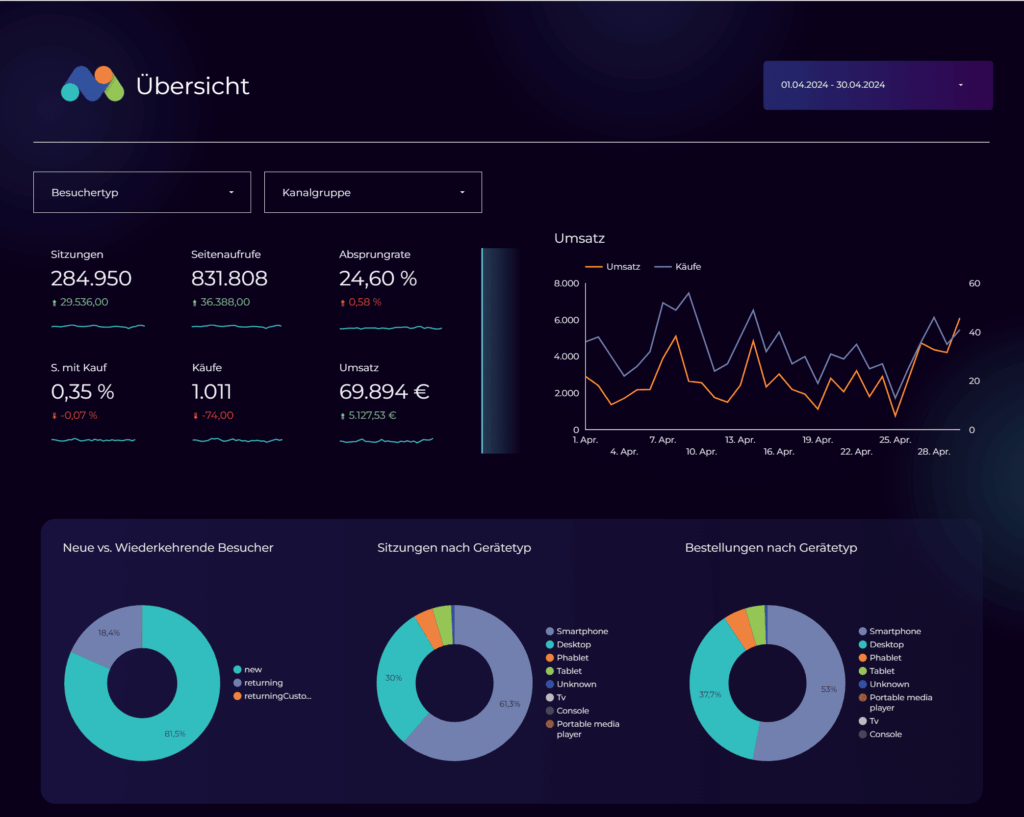

Data Usage – Looker Studio as an Example

Once your data is in BigQuery, you can analyze it with various tools. Looker Studio is a good choice because it’s free and easy to get started (a Google account is all you need). With a multi-page Looker Studio dashboard, you can view data on acquisition, behavior, e-commerce, and technical details, among other things.

With this flexible structure, you have much more freedom in compiling your reports than the Matomo interface allows.

Looker Studio Report: Overview page of the multi-page report.

Copying Dashboards with the LSD Cloner

Looker Studio itself doesn’t offer integrated versioning for dashboards. This is where the Looker Studio Dashboard Cloner (LSD Cloner) can help you:

- It copies existing dashboards using the Looker Studio Linking API.

- A JSON configuration file specifies how data sources and dashboard names should be adjusted.

- The tool is a simple npm package that automatically generates a link for you.
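The LSD Cloner itself is an npm package. To illustrate what such a generated link contains, here is a Python sketch that assembles a Looker Studio Linking API URL from a small configuration. The parameter names (c.reportId, r.reportName and the ds.<alias>.* BigQuery settings) follow our reading of the Linking API reference and should be checked against it; the report ID, the alias ds0 and the table names in the example are hypothetical.

```python
from typing import Dict
from urllib.parse import urlencode

LINKING_API_BASE = "https://lookerstudio.google.com/reporting/create"


def build_clone_link(template_report_id: str, report_name: str, data_sources: Dict[str, Dict[str, str]]) -> str:
    """Build a Linking API URL that creates a copy of a template report.

    data_sources maps each data source alias used in the template (hypothetically "ds0")
    to the BigQuery table the copy should read from.
    """
    params = {"c.reportId": template_report_id, "r.reportName": report_name}
    for alias, table in data_sources.items():
        prefix = f"ds.{alias}"
        params[f"{prefix}.connector"] = "bigQuery"
        params[f"{prefix}.type"] = "TABLE"
        params[f"{prefix}.projectId"] = table["projectId"]
        params[f"{prefix}.datasetId"] = table["datasetId"]
        params[f"{prefix}.tableId"] = table["tableId"]
    return f"{LINKING_API_BASE}?{urlencode(params)}"


if __name__ == "__main__":
    # Hypothetical configuration, similar in spirit to a cloner JSON file
    config = {
        "template_report_id": "TEMPLATE_REPORT_ID",
        "report_name": "Matomo Dashboard Client A",
        "data_sources": {
            "ds0": {"projectId": "my-gcp-project", "datasetId": "matomo", "tableId": "sessions"},
        },
    }
    print(build_clone_link(**config))
```

Opening the printed link in a browser would ask Looker Studio to create the copy with the data source swapped; one configuration entry per client keeps the duplication repeatable.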

This allows you to quickly duplicate and customize dashboards for different projects or clients.

Conclusion

With the Matomo API endpoint Live.getLastVisitsDetails, you gain detailed insights into your website's visitor behavior. Combining this data with BigQuery and Looker Studio expands your web analytics capabilities far beyond the standard Matomo interface.

Especially in the e-commerce environment, this setup enables more precise conversion analysis and a deeper understanding of your user behavior. Thanks to Dataform, you can efficiently transform and incrementally update your data, while Looker Studio lets you design flexible, attractive dashboards. Overall, this solution is ideal for you if you work with Matomo professionally and want to conduct state-of-the-art web analytics.

If you need a ready-made solution, submit a request. We have developed a fully automated solution for transferring Matomo data to BigQuery.